Future iPhones and iPads could move Apple's Touch ID fingerprint sensor from the device's home button to the display itself, allowing a more seamless and potentially dynamic way for a device to securely authenticate a user.

The possible future of Touch ID was revealed in a new patent application, entitled "Fingerprint Sensor in an Electronic Device," published on Thursday by the U.S. Patent and Trademark Office. Specifically, the filing describes how Apple might integrate a fingerprint sensor into the display stack of a device like an iPhone.

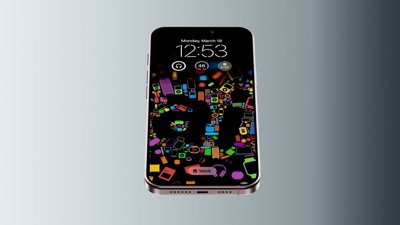

Apple's proposed touchscreen Touch ID system would allow a device to capture a single fingerprint at a predetermined, fixed location on the display. For example, an iPhone's lock screen or a third-party app could ask the user to place a finger on a specific spot on the screen so it can be scanned to verify their identity.

Apple's system could go further still, capturing a single fingerprint from any location on the display, or even scanning and identifying multiple fingerprints at once. On the larger screen of an iPad, for example, the system could enhance security by scanning all five fingerprints of one hand simultaneously, or even a full palm print.

In the filing, Apple notes that earlier fingerprint sensors embedded in displays degraded on-screen image quality by adding extra layers over the display. Such systems can also reduce the screen's ability to sense ordinary touch input.

Apple's proposed solution suggests that the fingerprint sensor could be implemented as an integrated circuit attached to the bottom surface of a cover sheet, positioned near that surface, or attached to the top surface of the display. Apple also describes an alternative in which the fingerprint sensor is implemented as a full panel spanning the display.

According to the filing, this full-panel fingerprint sensor could also serve as the touch sensor, allowing the same hardware to both detect touch input and scan fingerprints.

To preserve display clarity with a full-screen fingerprint sensor, the filing notes that the implementation could use "substantially transparent conductive material such as indium tin oxide."

Apple's interest in in-display fingerprint sensors is not new. AuthenTec, the company Apple acquired to develop Touch ID for the iPhone and iPad, did extensive research on the subject. One such AuthenTec patent, subsequently assigned to Apple, was revealed in 2013, before the iPhone 5s introduced Touch ID to the world.

Both the 2013 patent and this week's newly published filing are credited in part to Dale Setlak, a co-founder of AuthenTec. The other inventors listed on the filing are Marduke Yosefpor, Jean-Marie Bussat, Benjamin B. Lyon, and Steven P. Hotelling.

Apple acquired AuthenTec in 2012, setting the stage for Touch ID, which in its current implementation is limited to the home button on the iPhone and iPad.

Neil Hughes


41 Comments

I guess we can expect a news release from Samsung any moment claiming they will be doing the same thing.

Finally the end of the home button is coming. This will give more screen real estate in a smaller form factor.

So how would you unlock your phone?

By just touching the glass? Wouldn't that lead to more unintentional unlocking?

If Apple did something like LG does, where you double-tap the screen to turn it on and unlock the phone, adding Touch ID under the screen for that gesture would be nice. Then you wouldn't have any unintentional unlocking. Or they could use Taptic feedback (pressure on the screen) to act as the button. Or maybe they will embed the button in the housing, so that pressing, say, just above the Lightning port acts as a home button. (There have been patents for this: not specifically for a home button, but for letting any part of the housing act as a button, which would also allow developers to create buttons on the device for games. I think that explains it, though I don't know too much about it.)

I love the physical Home button on the iPhone. I think it's great for orienting the phone in your hand without looking, e.g. when you're fishing it out of your pocket while driving or in a dark room. With it, I can give Siri a command without ever looking at the phone. How would you do these things without a physical button? Phones are pretty featureless slabs these days; without that button, you'd have to feel for the power or volume buttons to orient the phone blindly. Some are apt to suggest haptic feedback (e.g. a vibration when your finger is over the button), but that seems like a waste of precious battery power.

Reading the comments here, I'd like to remind you all that the picture still shows a home button. What that means is unclear, but if it entails clicking the button first, it would be a step backwards. I'm not sure how practical an in-screen sensor would be; Apple itself points out the problems. The current Touch ID sensor has a resolution of, I believe, 525 ppi. The in-screen sensor would therefore need to be fairly large, possibly the entire screen, at that resolution. How much would that cost?