New tech promises to add terabytes of memory (or SSD storage) to any GPU — but there's a big catch

Panmnesia's CXL-GPU prototype shows the technology works

KAIST startup Panmnesia (the name means “the power to remember absolutely everything one thinks, feels, encounters, and experiences”) claims to have developed a new approach to boosting GPU memory.

The company says its breakthrough makes it possible to add terabyte-scale memory to a GPU using cost-effective storage media such as NAND-based SSDs, while maintaining reasonable performance.

However, there's a catch: the technology relies on the relatively new Compute Express Link (CXL) standard, which remains unproven in widespread applications and requires specialized hardware integration.

Technical challenges remain

CXL is an open-standard interconnect designed to efficiently connect CPUs, GPUs, other accelerators, and memory. It allows these components to share memory coherently, meaning each can access the same memory directly rather than copying data back and forth, which reduces latency and improves performance.

Unlike JEDEC’s DDR standard, CXL is not a synchronous protocol, so it can accommodate various storage media types without requiring exact timing or latency synchronization. Panmnesia says initial tests show its CXL-GPU solution performing more than three times better than traditional GPU memory expansion methods.

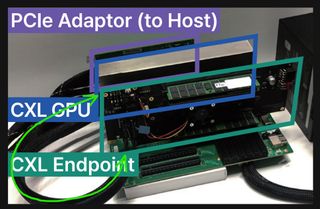

For its prototype, Panmnesia connected the CXL Endpoint (which includes terabytes of memory) to its CXL-GPU via two MCIO (Mini Cool Edge IO) cables. These high-speed cables support both the PCIe and CXL standards, enabling efficient communication between GPU and memory.

Adoption, however, may not be straightforward. The GPU cards may need additional PCIe/CXL-compatible slots, and significant technical challenges remain, particularly with the integration of CXL logic fabric and subsystems in current GPUs. Integrating new standards like CXL into existing hardware involves ensuring compatibility with current architectures and developing new hardware components, such as CXL-compatible slots and controllers, which can be complex and resource-intensive.

While Panmnesia's CXL-GPU prototype promises far greater memory expansion for GPUs, its reliance on the emerging CXL standard and the need for specialized hardware could stand in the way of immediate widespread adoption. Despite these hurdles, the benefits are clear, especially for large-scale deep learning models, which often exceed the memory capacity of current GPUs.
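To give a rough sense of why large models outgrow GPU memory, here is a minimal back-of-the-envelope sketch in Python. It counts only the model weights at 16-bit precision. The parameter counts and the 80 GB GPU capacity are illustrative assumptions, not figures from Panmnesia, and the function names are hypothetical.

```python
# Back-of-the-envelope check: do a model's weights alone fit in GPU memory?
# All figures are illustrative assumptions, not Panmnesia measurements.

BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored as 16-bit floats


def weight_footprint_gb(num_params: int,
                        bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Memory needed to hold the weights, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_gpu(num_params: int, gpu_memory_gb: float) -> bool:
    """True if the weights alone fit in the given GPU memory."""
    return weight_footprint_gb(num_params) <= gpu_memory_gb


HYPOTHETICAL_GPU_MEMORY_GB = 80.0  # assumed capacity of a single GPU

for billions in (7, 70, 175):  # hypothetical model sizes
    params = billions * 10**9
    need = weight_footprint_gb(params)
    verdict = "fits" if fits_on_gpu(params, HYPOTHETICAL_GPU_MEMORY_GB) else "does not fit"
    print(f"{billions}B parameters: {need:.0f} GB of weights, "
          f"{verdict} in {HYPOTHETICAL_GPU_MEMORY_GB:.0f} GB")
```

Real workloads also need room for activations, optimizer state, and caches, so actual requirements are typically higher than this weights-only estimate.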

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he has written for most of the UK’s PC magazines, and has launched, edited, and published a number of them too.
