Nvidia's Next-Gen Blackwell GPUs Rumored to Use Multi-Chiplet Design

Big things can only get bigger?

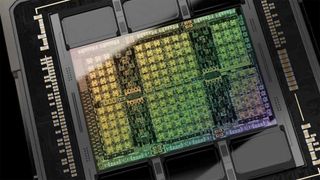

Unlike its rivals AMD and Intel, Nvidia has yet to implement a multi-chiplet design for high-performance compute GPUs, but the company might finally adopt this layout with its next-generation Blackwell GPUs. At least so says renowned hardware leaker @kopite7kimi, who tends to have accurate information about Nvidia's intentions. For now, though, this is pure speculation.

"After the dramas of GA100 and GH100, it seems that GB100 is finally going to use MCM," @kopite7kimi wrote in an X post. "Maybe GB100=2*GB102."

Nvidia's GA100 and GH100 compute GPUs have die sizes of 826 mm² and 814 mm², respectively, both very close to the maximum reticle size of 858 mm². Producing such large chips with good yields is hard, but TSMC appears to do it well, since Nvidia ships H100 and A100 compute GPUs in large volumes every quarter.
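To put "very close" in numbers, here is a minimal sketch that computes how much of the reticle each die occupies. It uses only the figures quoted above; the function and constant names are illustrative, not anything from Nvidia or TSMC.

```python
RETICLE_LIMIT_MM2 = 858.0  # maximum reticle size cited in the article

# Die sizes cited in the article, in mm^2
DIE_SIZES_MM2 = {"GA100": 826.0, "GH100": 814.0}


def reticle_utilization(die_mm2, reticle_mm2=RETICLE_LIMIT_MM2):
    """Return the fraction of the reticle area that a die of this size occupies."""
    return die_mm2 / reticle_mm2


for name, area in DIE_SIZES_MM2.items():
    print(f"{name}: {reticle_utilization(area):.1%} of the reticle limit")
```

Both dies come out at roughly 95% to 96% of the limit, which leaves very little room to grow a monolithic design further.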

While Nvidia has so far managed to increase the performance of its compute GPUs tangibly with every new generation, reticle size remains a constraint. A multi-chiplet design would let Nvidia pack more transistors into its next-generation compute GPU and deliver a larger performance gain over the H100 than architectural improvements alone would.

Since both AMD and Intel have already adopted multi-chiplet designs for their compute GPUs and will only increase chiplet and transistor counts going forward, Nvidia might have no choice but to embrace a multi-tile design too. It remains to be seen whether the company will go with a dual-chiplet approach (like AMD's Instinct MI250) or a multi-chiplet approach (like AMD's Instinct MI300 or Intel's Ponte Vecchio), but it simply cannot ignore the advantages offered by modern packaging technologies.

If we continue to speculate, we might assume that Nvidia will adopt a multi-tile design only for its Blackwell GPUs for AI and HPC, whereas its gaming GPUs will remain monolithic. This would make sense, since making two GPUs work in parallel is hard. But then again, Nvidia might not be able to avoid multi-chiplet designs even for client PCs in the High-NA era: ASML's next-generation scanners will halve the reticle size (to 429 mm²), so a GPU as large as the monolithic AD102 (609 mm²) could no longer be built as a single die, and high-end gaming parts of that scale would need at least two chiplets.
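The "at least two chiplets" figure follows from simple arithmetic, sketched below. This is a rough lower bound under a stated simplification: it assumes the same total silicon area is split across dies and ignores the extra area that die-to-die interconnect would need. The function name is illustrative.

```python
import math

HIGH_NA_RETICLE_MM2 = 429.0  # halved reticle size cited in the article
AD102_MM2 = 609.0            # AD102 die size cited in the article


def min_chiplets(total_silicon_mm2, reticle_mm2):
    """Smallest number of dies, each within the reticle limit, covering the total area.

    Assumption: no area overhead for die-to-die links, so this is a lower bound.
    """
    return math.ceil(total_silicon_mm2 / reticle_mm2)


print(min_chiplets(AD102_MM2, HIGH_NA_RETICLE_MM2))  # 2
```

With today's 858 mm² limit, the same function returns 1 for AD102, which is why it can ship as a monolithic die now.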


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers, and from modern process technologies and the latest fab tools to high-tech industry trends.


-Fran-

I can absolutely see nVidia saying "ah, reticle size limit... let's use MCM to go even bigger". AMD's take is "let's keep costs in check".

I can't say I dislike nVidia's mentality here: go bigger or go home. Given the markets they want to attack and cover, they're most definitely incentivised to go that way.

Interesting times ahead on how AMD and nVidia will approach MCM.

Regards.

NeoMorpheus

Argolith said: Looking forward to being able to play 1080p Medium with my 12.000$ entry-level GPU.

And guess what will be said by the Tom's reviewer assigned to this review?

"Just buy it!"

Edit: actually, he just posted in another thread stating that multi-chiplet designs are simply worse, so I want to see how that will change... lol

When the new Nvidia flagship GPU gets released, I hope it's as quantum a leap in performance as 4090 was compared to 3090.
