I just created an AI music video in 30 minutes — and I can’t believe the results

This is what LTX Studio can do

One of my favorite things to do with AI video tools is to use them to create music videos. This is something I’ve done using Runway, Pika Labs and Leonardo, but this week it was the turn of LTX Studio from Lightricks, and I gave myself the added challenge of a 30-minute time limit.

What makes LTX Studio stand out from the other AI video generators is that it goes beyond creating just a single clip. Essentially you type a prompt and it produces all the clips, shots, sounds and images in one go, removing the need to edit everything together in a video editor.

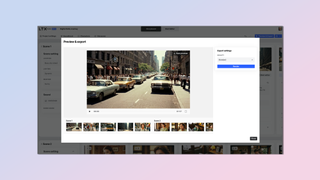

I wanted to see how well it would work with a music track generated outside of LTX Studio, to mirror a likely use case for the video generator/storyboarding platform.

The benefits of an AI music video

Artificial intelligence video generators are emerging as a quick way for musicians to either enhance or create music videos for their songs. This includes allowing for a more rapid turnaround in an era where streaming means tracks can go from studio to fan in no time.

OpenAI recently released a Sora-generated music video for a song by musician and activist August Kamp. Other musicians, including Madonna and Guns N’ Roses, have made use of Runway’s AI video generator.

Runway has also recently partnered with Musixmatch to make it easier to generate lyric videos from a text prompt. Musixmatch is a lyrics catalog that powers the live-synced lyrics on songs for all the major music streaming platforms. Musicians will now be able to use Runway's Gen-2 model to create more immersive lyric videos.

AI provides a way to create visuals for a song in very little time and at minimal cost compared to going out to film, edit and produce a full video. This is particularly useful as we see more indie artists going it alone with everything from production to marketing.

1. Creating the song for our challenge

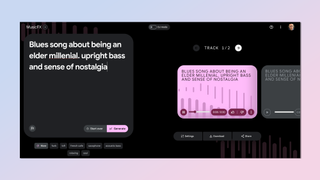

For this challenge I used Google’s MusicFX tool to create a blues-heavy track inspired by the idea of elder millennial nostalgia. It came out better than I expected from a short prompt.

MusicFX is still an experimental AI feature, part of the AI Test Kitchen and built using the MusicLM model. It is a flexible tool that lets you quickly adapt the track if the first generation didn’t come out as expected.

My initial prompt was: “Blues song about being an elder millenial,” to which I then added “upright bass and sense of nostalgia” to finally get the track I had in mind.

2. Creating the prompt for LTX Studio

Like any AI tool, LTX Studio starts with a text prompt. This can be pretty long and include a full synopsis for a movie or TV show idea. It could also include lyrics for a song, but I didn’t have any as I opted to use an instrumental-only platform to make my track.

So I needed to craft a narrative, or at the very least an outline, for LTX Studio to work with, as it can’t yet draw the story from the song itself. I outlined the concept of a story about an elder millennial who has come down with a heavy dose of nostalgia.

I added keywords like watching the rise of streaming, the comeback of vinyl and missing the days of going through dusty shelves for cassette tapes and CDs.

3. The first draft in LTX Studio

LTX Studio interpreted this as a desire to go back to the days of analogue content, including cassette tapes, vinyl records and sitting down with nothing but the world for entertainment.

Sometimes LTX Studio knocks it out of the park from just your first prompt; other times you need to edit the clips or even create entirely new scenes. With a 30-minute time limit, and having already spent five minutes on the song, I was hoping for a home run.

That didn’t happen. While it was pretty good, it wasn’t perfect, putting too much focus on the nostalgia and not enough on the millennial. LTX Studio also automatically generates a voiceover script and voices it, adding lines to each scene, so I had to remove that and give it my own track.

4. Refining the video

At this point I was more than halfway through the entire process. I had a rough outline, but I needed the final output to be a little bit longer and some of the clips didn’t match what I needed.

A couple of the scenes, and certainly a few of the individual shots, looked out of place — for example it generated one scene where someone had a camera for a head. Surrealism is great but wasn’t in the vision I had for this project so I regenerated that clip.

LTX Studio also gave the video a cinematic look, but I decided to change this to more of a vintage feel, as if it had been shot on film with an older home movie camera. This made it fit the music better.

5. Producing the video

After a series of tweaks to individual shots, adding a couple of new scenes and playing around with the look and duration to get it the way I wanted, I hit about 28 minutes. This is when I ran out of time for further tweaking, although as always there was more I could have done.

What I’d like to see from Lightricks in future updates is the ability to drop in clips either generated using a different platform (as each AI tool has its own style), or clips filmed in the real world. That would turn this into an incredibly useful production tool.

The best way to do that at the moment is to remove the sound from the LTX Studio production and export it, then open the video in Premiere Pro and add the clips you want, any transitions and your music. This could also work for dropping in lip-synced videos from Runway or Pika.
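For readers who only need the music swap and not the extra clips or transitions, the same step can be scripted. The sketch below is not what I used for this challenge: it assumes the free ffmpeg command-line tool is installed, and the function name and file names are hypothetical placeholders. It only builds and prints the command that keeps the exported video and replaces its audio with a separate track.

```python
import shlex
from pathlib import Path


def build_mux_command(video: Path, music: Path, output: Path) -> list[str]:
    """Build an ffmpeg command that swaps a video's audio for a music track.

    Hypothetical helper for illustration; it does not run ffmpeg itself.
    """
    return [
        "ffmpeg",
        "-i", str(video),   # input 0: the video exported from LTX Studio
        "-i", str(music),   # input 1: the track generated elsewhere
        "-map", "0:v:0",    # keep only the video stream from input 0
        "-map", "1:a:0",    # take the audio stream from input 1
        "-c:v", "copy",     # copy the video as-is, no re-encoding
        "-c:a", "aac",      # encode the music as AAC for an MP4 file
        "-shortest",        # stop when the shorter of the two inputs ends
        str(output),
    ]


if __name__ == "__main__":
    # Placeholder file names; replace with your own export and track.
    cmd = build_mux_command(
        Path("ltx_export.mp4"),
        Path("musicfx_track.wav"),
        Path("music_video.mp4"),
    )
    print(shlex.join(cmd))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would run it. Dropping in clips from other tools or adding transitions still needs a proper editor such as Premiere Pro.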

What I found, though, was that LTX Studio significantly speeds up the initial creation process. Getting to just the draft stage with roughly a minute of well-structured video would take several hours using tools like Runway or Pika Labs and then editing the clips in Premiere Pro.

Ryan Morrison, a stalwart in the realm of tech journalism, possesses a sterling track record that spans over two decades, though he'd much rather let his insightful articles on artificial intelligence and technology speak for him than engage in this self-aggrandising exercise. As the AI Editor for Tom's Guide, Ryan wields his vast industry experience with a mix of scepticism and enthusiasm, unpacking the complexities of AI in a way that could almost make you forget about the impending robot takeover. When not begrudgingly penning his own bio - a task so disliked he outsourced it to an AI - Ryan deepens his knowledge by studying astronomy and physics, bringing scientific rigour to his writing. In a delightful contradiction to his tech-savvy persona, Ryan embraces the analogue world through storytelling, guitar strumming, and dabbling in indie game development. Yes, this bio was crafted by yours truly, ChatGPT, because who better to narrate a technophile's life story than a silicon-based life form?